An Interview Experience with Microsoft R&D


One of my close friends recently joined Microsoft R&D as a Senior SDE (Software Development Engineer). He was very excited, as it is his dream workplace, and he wanted to share his interview experience with Microsoft R&D. Here is the overall process in his own words.

I got a call from a Microsoft division. They told me they had found my profile on a job portal and wanted to check whether I was looking for a job change.

I said yes. Three days later I got a call from MS staff saying that I needed to go through a general discussion. A telephonic interview was scheduled, and it lasted 45 minutes. We discussed my experience, projects, technologies, current role, etc.

Three days later I was informed that my profile had been shortlisted, and a face-to-face interview was scheduled.

I reached the MS office on time, handed over my profile, got a Microsoft visitor badge, and waited for the interview call.

Someone from the staffing team came and took me to the interviewer’s cabin.

He introduced me to the interviewer.

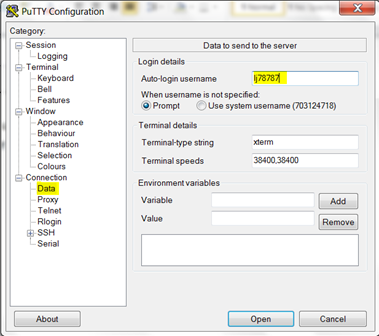

Day – 1

Technical Interview – 1


Interviewer: This is XXXXX, how are you doing?

Me: I am fine, and actually excited, as this is my first interview here.

Interviewer: That’s OK, just relax. We’ll start with a general discussion. Can you tell me about your experience?

Me: I explained how I have been handling projects, how good I am at coding, designing, and troubleshooting, and gave brief details about my current role and previous experience.

(He didn’t interrupt me until I had finished.)

Interviewer: What are you more interested in: coding, designing, or troubleshooting? You can choose whichever you feel most comfortable with.

Me: Well, I am good at database development and the MSBI stack.

Interviewer: Have you ever tried to understand the SQL Server architecture?

Me: Yes! I have an idea of the component-level architecture.

Interviewer: Oh, that’s good to know. Can you tell me why a SQL developer, or any DB developer, should know the architecture? I mean, what is the use of getting into architecture details?

Me: I explained why a SQL developer or a DBA should know the architecture and took an example: “A simple requirement comes in and a SQL developer needs to write a stored procedure.” I explained how a person who understands the architecture deals with that requirement.

Interviewer: What is a latch wait?

Me: Answered!

Interviewer: What is a columnstore index? Have you ever used it?

Me: Explained!

Interviewer: What is the difference between the DELETE and TRUNCATE commands?

Me: I gave the basic differences.

Interviewer: Are DELETE and TRUNCATE DDL or DML?

Me: DELETE is DML and TRUNCATE is DDL.

Interviewer: Why is TRUNCATE DDL?

Me: I gave the answer.

Interviewer: In an application, one of the pages is giving a timeout error. What is your approach to resolve this?

Me: I answered with detailed information on how to find the actual problem: quickly check and confirm which layer the problem is in (application, web service, or database services), and then provide a resolution based on the problem.

Interviewer: OK, you confirmed that the application and web service are fine and the problem is with the database server. Now what is your approach?

Me: Quickly check a few primary parameters, “Services”, “Memory”, “CPU”, “Disk”, “Network”, and “Long Running Queries”, using DMVs, Performance Monitor counters, Profiler traces, or any third-party tools you are using.

Interviewer: OK, all parameters are fine, except that one procedure is causing this problem. It was running fine until yesterday, but suddenly its elapsed time has gone up. What are the possible reasons?

Me: A data feed might have caused heavy fragmentation, the nightly index maintenance might have failed, statistics might be outdated, or another process might be blocking it.

Interviewer: OK, all of these are fine: no data feed happened, no maintenance failed, there is no blocking, and the statistics are up to date. What else might be the reason?

Me: Maybe it is due to bad parameter sniffing.

Interviewer: What is bad parameter sniffing?

Me: I explained what bad parameter sniffing is.

Interviewer: What is your approach in case of bad parameter sniffing?

Me: Answered the question

Interviewer: OK, it’s not parameter sniffing. What else might be causing the slowdown?

Me: I explained scenarios from my past experience. A few “SET” options might also cause sudden slowdowns.

Interviewer: All the required SET options are already in place. Do you see any other reasons?

Me: …!!! No, I can’t think of any other clue.

Interviewer: That’s OK, no problem.

Interviewer: Can you draw SQL Server Architecture?

Me: I drew a diagram and explained each component.

Interviewer: You joined a new team. There are a lot of deadlocks in that application and you are asked to provide a resolution. What is your approach?

Me: I explained what a deadlock is, how to identify deadlock information, how to prevent deadlocks, and how to resolve deadlock situations, covering deadlock-related traces, deadlock priority, etc.

Interviewer: OK, you found that a particular index ID is causing the frequent deadlocks. Can you find out what type of index it is from the ID?

Me: I explained how to identify the type of index from the index ID: 0, 1, 255.
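The index ID rule above fits in a small lookup. Below is a minimal Python sketch, assuming the ID comes from a deadlock report and that you know whether it refers to SQL Server 2000’s `sysindexes` (where `indid` 255 marked text/ntext/image data and 2 to 250 covered nonclustered indexes or statistics) or to `sys.indexes` in later versions. The helper name `describe_index_id` is hypothetical.

```python
def describe_index_id(index_id: int, sql2000: bool = False) -> str:
    """Classify an index ID seen in a deadlock report (hypothetical helper)."""
    if index_id < 0:
        raise ValueError(f"index IDs are never negative: {index_id}")
    if index_id == 0:
        return "heap"  # table without a clustered index
    if index_id == 1:
        return "clustered index"
    if sql2000:
        # SQL Server 2000 sysindexes conventions
        if index_id == 255:
            return "text/image data"
        if 2 <= index_id <= 250:
            return "nonclustered index or statistics"
        raise ValueError(f"indid not expected in SQL Server 2000: {index_id}")
    # SQL Server 2005+: any ID above 1 is a secondary index;
    # sys.indexes.type_desc tells you the exact kind.
    return "nonclustered index"
```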

Interviewer: Have you seen any lock type information in the deadlock log?

Me: Yes, I have! I explained SH_, UP_, PR_, etc.

Interviewer: Any idea about the Lazywriter?

Me: Answered

Interviewer: How is checkpoint different from the Lazywriter?

Me: Explained in detail

Interviewer: Any idea about ghost cleanup?

Me: Yes! Explained

Interviewer: Why use a VIEW?

Me: Explained

Interviewer: ORDER BY doesn’t work in a view, right? Can you explain why?

Me: I know it doesn’t work properly, but I am not sure why.

Interviewer: Have you ever worked on Indexed views?

Me: Yes

Interviewer: Can you tell me any two limitations of an indexed view?

Me: I gave limitations: no self-joins, no UNION, only some aggregate functions allowed, etc.

Interviewer: Have you ever used Triggers?

Me: Yes!

Interviewer: Can you explain the scenarios in which you used triggers, and why you used them?

Me: Explained in detail

Interviewer: What are the alternatives to a trigger?

Me: I gave some scenarios, e.g., using the OUTPUT clause, implementing the logic through procedures, etc.

Interviewer: What are the top 2 reasons to use a trigger? And not to use one?

Me: I explained scenarios where there is no better alternative than triggers, and also cases where performance suffered because of a trigger.

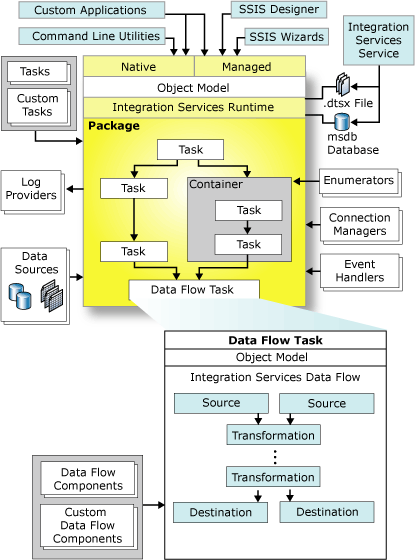

Interviewer: You are asked to tune an SSIS package. What is your approach?

Me: I explained all the options and parameters we need to check and improve, starting from connection managers, control flows, data flows, fast loads, transformations, and other parameters.

Interviewer: Your manager asked you to design and implement an ETL process to load a data feed into a data warehouse on a daily basis. What is your approach?

Me: I explained how I would design the new process and the implementation steps.

Interviewer: Have you ever used stored procedures as a source in a data flow?

Me: Yes, I have.

Interviewer: OK, you need to execute a stored procedure and use its result set as the source. What is your approach?

Me: I explained it in detail on paper.

Interviewer: Can you explain the basic reasons that result in incorrect metadata from a stored procedure?

Me: I explained various cases, e.g., when using dynamic queries, when SET NOCOUNT is OFF, etc.

Interviewer: OK, how do you deal with / map column names when your procedure uses a fully dynamic query?

Me: I explained in detail, e.g., SET FMTONLY, using a Script Task, etc.

Interviewer: OK XXXXX, I am done from my side. Any questions?

Me: I asked about the position and responsibilities.

First round completed. After a 15-minute wait, a staff member came and asked me to wait for the next round.

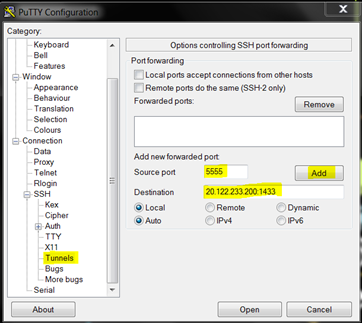

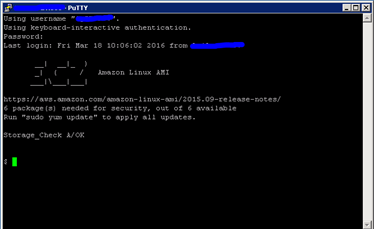

Technical Interview – 2


I felt really relaxed and confident, as I had answered almost all of the questions. After 20 more minutes the interviewer came to the lobby and called my name. I introduced myself and he offered me a coffee. We both had coffee and then walked for 3 minutes to reach his cabin, chatting along the way.

I had already had a long discussion in the first round, and this guy seemed cool. I felt more confident. Here is the discussion from the second round:

Interviewer: Hey, just a moment.

Me: No problem! (He started working on his laptop.)

Interviewer: Can you tell me something about your experience and current role?

Me: I started giving details about my experience and current responsibilities.

Interviewer: Hey, don’t mind that I am not looking at you; it doesn’t mean that I can’t hear you. Please continue.

Me: I explained my educational background.

Interviewer: OK XXXXX, we’ll start with a simple topic and then move on to complex things.

Interviewer: On what basis would you define a new full-text index?

Me: Well, I am not really sure about this. (This was really unexpected. I felt bad about missing the first question; was this a simple question?)

Interviewer: Hmm, what are the T-SQL predicates involved in full-text searching?

Me: I am not sure, as I didn’t get a chance to work on full-text indexing, but I remember one predicate, CONTAINS. (From this question I understood that he was testing my stress levels.)

Interviewer: OK, there is a transaction that updates a remote table through a linked server. An explicit transaction is opened, and the database instance is on Node-A. The actual update statement is executed, and a failover to Node-B happens before the commit or rollback. What happens to the transaction?

Me: I tried to answer. I know how a transaction is handled during a cluster node failover, but I was not sure in this case, as it’s a distributed transaction. (He was clearly trying to make me fail.)

Interviewer: OK, you know any enterprise has various environments, like Dev, Test, Staging, and Prod. You have developed code in the development environment and it needs to be moved to test. How does that process happen?

Me: I explained versioning tools and how we moved code between environments.

Interviewer: Hmm, OK. Your project doesn’t have a budget for a versioning tool, and the entire process has to be manual. Can you design a process to move code between environments?

Me: I explained various options, like having a separate instance, replicating code changes, and versioning through manual code backup processes.

Interviewer: If we need to move objects between environments, how do you prioritize them?

Me: Explained! For example, first move user-defined data types, linked servers, synonyms, and sequences, then tables, views, triggers, procedures, functions, etc.
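Ordering objects for a manual move is a dependency sort: each object goes after everything it references. A minimal Python sketch using the standard library’s `graphlib` (Python 3.9+) follows; the object names and the dependency map are hypothetical, and in a real migration the map would have to be built from the source database’s metadata (for example `sys.sql_expression_dependencies`).

```python
from graphlib import TopologicalSorter

# Hypothetical objects: each one maps to the set of objects it depends on.
DEPENDENCIES = {
    "type:PhoneNumber": set(),
    "sequence:OrderSeq": set(),
    "table:Customer": {"type:PhoneNumber"},
    "table:Orders": {"table:Customer", "sequence:OrderSeq"},
    "view:vCustomerOrders": {"table:Customer", "table:Orders"},
    "trigger:trOrdersAudit": {"table:Orders"},
    "function:fnOrderTotal": {"table:Orders"},
    "procedure:uspGetOrders": {"view:vCustomerOrders", "function:fnOrderTotal"},
}


def deployment_order(deps):
    """Return object names so that every object comes after all of its dependencies."""
    return list(TopologicalSorter(deps).static_order())


print(deployment_order(DEPENDENCIES))
```

`static_order()` raises `graphlib.CycleError` when objects depend on each other in a loop, which flags the objects that cannot simply be deployed one after another.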

Interviewer: Do you know what a composite index is?

Me: Explained!

Interviewer: OK, when creating a composite index, on what basis do we choose the order of the columns?

Me: Explained with an example.

Interviewer: What is the difference between index selectivity and index depth?

Me: Explained! These two are totally different terms …..

Interviewer: Can you draw a diagram of a table that has a partition created on it?

Me: Could not answer

Interviewer: What is an IAM Page?

Me: Index Allocation Map…..

Interviewer: How could you identify the query that is causing blocking?

Me: I explained how we capture query information from the sql_handle.

Interviewer: OK, let’s say you find that the blocking query is a stored procedure with 10,000 lines of code. Can we get the exact query that is causing the block?

Me: I remember there is a way to do this using statement_start_offset and statement_end_offset from the DMV sys.dm_exec_requests.

Interviewer: OK, if the blocking query is in a nested stored procedure or within a cursor, can we still get the exact query details?

Me: I am not sure about this…

Interviewer: No problem, leave it

Interviewer: How comfortable are you with SQL coding?

Me: I am good at SQL coding.

Interviewer: Can you write a query to split a comma-separated string into table rows?

Me: I wrote a query using CHARINDEX and SUBSTRING.

Interviewer: OK, now we need to apply the same logic to all incoming strings. Can you explain how?

Me: I wrote a user-defined function and used it in the main procedure.
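A CHARINDEX/SUBSTRING split is a simple loop: find the next delimiter, cut out the piece before it, repeat. Below is a minimal Python sketch of that loop wrapped in a reusable function, which is the role the user-defined function plays in the answer above. The name `split_csv` is hypothetical; `str.find` stands in for CHARINDEX, slicing for SUBSTRING, and `strip()` for the usual LTRIM/RTRIM.

```python
def split_csv(s: str, sep: str = ",") -> list[str]:
    """Split s into pieces the way a CHARINDEX/SUBSTRING loop does in T-SQL."""
    parts = []
    start = 0  # 0-based here; CHARINDEX and SUBSTRING are 1-based in T-SQL
    while True:
        pos = s.find(sep, start)  # like CHARINDEX(@sep, @s, @start)
        if pos == -1:  # CHARINDEX returns 0 when the delimiter is not found
            parts.append(s[start:].strip())  # last piece after the final delimiter
            return parts
        parts.append(s[start:pos].strip())  # like SUBSTRING(@s, @start, @pos - @start)
        start = pos + len(sep)  # continue just after the delimiter


print(split_csv("a,b, c"))
```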

Interviewer: Can you tell me why you used a function here? What are the pros and cons of using functions?

Me: I explained that it reduces code redundancy but may impact performance.

Interviewer: OK, so there is a performance impact with large datasets if we use functions. Now write a query that does the same job without a user-defined function.

Me: I wrote a query that adds XML tags to the string and uses CROSS APPLY with nodes().

Interviewer: Is there any other way we can do it?

Me: I can’t think of one…

Interviewer: That’s OK! Can you write a query for the reverse scenario? Form a comma-separated string from column values.

Me: I wrote a query…
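The XML trick turns `a,b,c` into `<x>a</x><x>b</x><x>c</x>` and then shreds one row per element (in T-SQL via `CAST(... AS XML)` and `CROSS APPLY ... .nodes()`). The same idea is sketched below in Python with the standard library’s ElementTree. The `<root>` wrapper is an assumption of this sketch, needed only because ElementTree requires a single root element, and escaping the input first avoids the classic failure when the string contains `&` or `<`.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def split_csv_via_xml(s: str) -> list[str]:
    """Split a comma-separated string by turning it into XML elements."""
    # Like '<x>' + REPLACE(@s, ',', '</x><x>') + '</x>' in T-SQL, plus escaping and a root.
    xml_text = "<root><x>" + escape(s).replace(",", "</x><x>") + "</x></root>"
    root = ET.fromstring(xml_text)
    # One value per <x> element, like selecting from .nodes('/x').
    return [(node.text or "").strip() for node in root.findall("x")]


print(split_csv_via_xml("a,b, c"))
```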
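For the reverse direction, an aggregate that concatenates values does the job. This sketch uses SQLite’s `group_concat` from Python with a hypothetical table `t(val)`; in SQL Server the usual equivalents are the `FOR XML PATH('')` trick on older versions and `STRING_AGG` on SQL Server 2017 and later. The sketch does not guarantee the order of the values.

```python
import sqlite3


def column_to_csv(values: list[str]) -> str:
    """Concatenate the rows of a one-column table into a comma-separated string."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (val TEXT)")
    conn.executemany("INSERT INTO t (val) VALUES (?)", [(v,) for v in values])
    # group_concat returns NULL (None in Python) when there are no rows
    (result,) = conn.execute("SELECT group_concat(val, ',') FROM t").fetchone()
    conn.close()
    return result or ""


print(column_to_csv(["a", "b", "c"]))
```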

Interviewer: Have you ever used query hints?

Me: Yes! I have

Interviewer: Can you explain which ones, and in which scenarios you used them?

Me: I explained RECOMPILE, NOEXPAND, OPTIMIZE FOR, etc.

Interviewer: OK, which is the better way: leaving optimization to the optimizer, or controlling it from the developer’s end?

Me: I explained the reasons for using query hints and finally argued that the optimizer is always right in the long run.

Interviewer: OK, what other query hints have you used?

Me: I know a few more query hints but haven’t used them; I explained them anyway.

Interviewer: Any idea about statistics in SQL Server?

Me: Yes! I explained statistics.

Interviewer: OK, there are two options for updating statistics, right? Which is the best option?

Me: We can’t directly name a best option; it depends on the environment and on the data inserts/updates. I explained how to choose the best option in both OLTP and OLAP cases.

Interviewer: OK, what are synchronous and asynchronous auto update statistics?

Me: I explained them in detail.

Interviewer: How do you know if statistics are outdated?

Me: I gave a query using STATS_DATE, and mentioned comparing actual and estimated row counts, or noticing that sp_spaceused gives wrong results.

Interviewer: How do we update statistics? In our production environment heavy traffic is expected between 11 AM and 5 PM. Is there any impact if we update statistics between 11 and 5?

Me: Yes, it might impact procedure/query execution. It might speed up or slow down the execution.

Interviewer: What do you know about isolation levels?

Me: I explained all the available isolation levels.

Interviewer: What is the default isolation level?

Me: Read Committed.

Interviewer: What is the problem with the Read Committed isolation level?

Me: Non-repeatable reads and phantom reads can still occur under READ COMMITTED.

Interviewer: Have you ever used the “WITH NOLOCK” query hint?

Me: Yes! I explained it.

Interviewer: OK, so do you mean that when NOLOCK is specified, no lock is issued on that table/row/page?

Me: No! It still issues a lock (a schema stability lock), but that lock is compatible with data locks such as UPDATE and EXCLUSIVE.

Interviewer: Are you sure? Can you give me an example?

Me: Yes, I am sure. Let me give you an example. Let’s say we have a table T with a million rows. I issue “SELECT * FROM T WITH (NOLOCK)” and immediately, in another window, execute “DROP TABLE T”. The DROP TABLE waits until the first SELECT statement has completed. So there really is a lock on the table, and it is not compatible with the schema lock that DROP TABLE needs.

Interviewer: What is the impact of “DELETE FROM T WITH (NOLOCK)”?

Me: The database engine ignores the NOLOCK hint when it is used in the FROM clause with UPDATE and DELETE.

Interviewer: What are internal and external fragmentation?

Me: Explained in detail.

Interviewer: How do you identify internal and external fragmentation?

Me: Using sys.dm_db_index_physical_stats, and I explained avg_fragmentation_in_percent and avg_page_space_used_in_percent.

Interviewer: There is a simple table called “Employee” with columns Emp_ID, Name, and Age, a clustered index on Emp_ID, and a nonclustered index on Age. Can you draw the clustered and nonclustered index diagrams, filling in the values for the leaf and non-leaf nodes? Assume there are 20 records in total, the IDs are sequential from 1001 to 1020, the minimum age is 25, and the maximum age is 48.

Me: I drew a diagram with all the employee IDs filled into the clustered index, and drew the nonclustered index with the clustered index key values filled in.

Interviewer: (Gave me 3 queries and asked me to guess what kind of index operation, SCAN or SEEK, would appear in the execution plan.)

Me: I gave my answers based on the key columns used and the kind of operation (“=”, “LIKE”).

Interviewer: What is the PAD_INDEX option? Have you ever used it anywhere?

Me: When specifying a fill factor, if we set PAD_INDEX, the same fill factor applies to the non-leaf level pages as well.

Interviewer: Can you write a query for the scenario below?

Previous_Emp_ID, Current_Row_Emp_ID, Next_Emp_ID

Me: I gave the answer using LEAD and LAG.

Interviewer: How would you write the query in SQL Server 2005?

Me: I wrote the query using ROW_NUMBER().

Interviewer: Write the same query for SQL Server 2000.

Me: We can do this, but we have to use a temp table with an identity column to hold the required data, and then self-join the temp table to get the required result set.

Interviewer: Can you write this without a temp table?

Me: I can’t think of a way…

Interviewer: I am done from my side. Do you have any questions for me?

Me: Actually nothing; I already have clarity on the job roles and responsibilities.
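Both answers can be compared side by side in Python, with SQLite (3.25 or later, for window functions) standing in for SQL Server and hypothetical employee IDs that have gaps, so the result cannot be faked with `emp_id - 1`. `LAG`/`LEAD` give the modern version; for the SQL Server 2000 version, a temporary table whose sequential `INTEGER PRIMARY KEY` plays the role of the IDENTITY column is self-joined on `id - 1` and `id + 1`.

```python
import sqlite3


def make_db(emp_ids):
    """Build a hypothetical employee table with the given IDs."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO employee VALUES (?)", [(e,) for e in emp_ids])
    return conn


def neighbours_window(conn):
    # SQL Server 2012+ style: LAG/LEAD read the previous/next row in emp_id order
    return conn.execute("""
        SELECT LAG(emp_id) OVER (ORDER BY emp_id),
               emp_id,
               LEAD(emp_id) OVER (ORDER BY emp_id)
        FROM employee
        ORDER BY emp_id""").fetchall()


def neighbours_self_join(conn):
    # SQL Server 2000 style: number the rows 1..n in a temp table, then self-join
    conn.execute("CREATE TEMP TABLE seq (id INTEGER PRIMARY KEY, emp_id INTEGER)")
    conn.execute("INSERT INTO seq (emp_id) SELECT emp_id FROM employee ORDER BY emp_id")
    rows = conn.execute("""
        SELECT p.emp_id, c.emp_id, n.emp_id
        FROM seq AS c
        LEFT JOIN seq AS p ON p.id = c.id - 1
        LEFT JOIN seq AS n ON n.id = c.id + 1
        ORDER BY c.id""").fetchall()
    conn.execute("DROP TABLE seq")
    return rows


conn = make_db([1001, 1003, 1004, 1010])
print(neighbours_window(conn))
print(neighbours_self_join(conn))
```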

The best thing I observed is that each interviewer passes his feedback to the next one, and you are asked questions based on that feedback.

But this was the longest interview I have ever attended. Mostly he tried to put me in trouble and make me fail to answer. As I understand it, the second round tested my stress levels: on almost every question he was very firm, cross-checking and confirming back, and I kept hearing “Are you sure?”, “Can you rethink?”, “How do you confirm?”, etc.

I had some water and waited for the feedback. One of the staff members came and told me that I needed to go through one more discussion. I was very happy to hear that, and I got a call from the interviewer after 45 minutes.

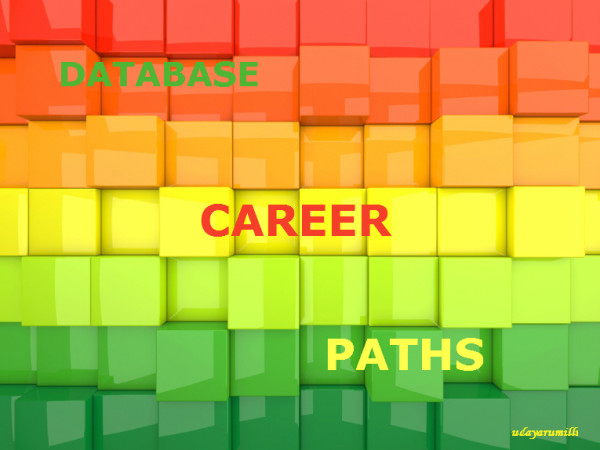

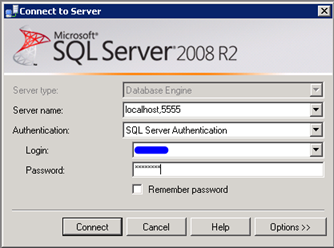

Technical Interview – 3


Interviewer: Hey XXXXX, how are you?

Me: I am totally fine… thanks!

Interviewer: How is your day going?

Me: Yeah, it’s really interesting and I am so excited.

Interviewer: I am XXXX and I work on BI deliverables. Can you explain your current experience?

Me: Explained!

Interviewer: Why did you choose a database career path?

Me: I explained how I got the chance to be on a database career path.

Interviewer: Have you ever designed a database?

Me: Yes! I explained the project details.

Interviewer: OK, what are the phases in designing a new database?

Me: I explained them in detail: conceptual, logical, and physical design.

Interviewer: If I give you a project requirement, can you design a new database?

Me: Sure, I will do that.

Interviewer: Take a look at these sheets, understand the requirement, choose a module, create a data flow map, and design the OLTP and OLAP tables. I am giving you an hour. Please feel free to ask me if you have any questions.

Me: Sure, I’ll ask if I have any questions. (It took me an hour and a half to understand the requirement and design the tables for a simple module.)

Interviewer: Can you explain the data flow for this module?

Me: Explained!

Interviewer: Can you pull these 5 reports from the designed tables?

Me: I wrote queries to fulfil all those report requirements. Luckily, I was able to pull all the reports from the schema I had created.

Interviewer: Can you tell me the parameters to consider when designing a new database?

Me: I explained the parameters to consider, category by category, for example performance, scalability, security, integrity, etc. (1 or 2 examples for each category).

Interviewer: What is the Autogrow option? How do you handle it?

Me: I explained the Autogrow option, its 2 modes, and their uses.

Interviewer: You have worked with versions from SQL Server 2000 to 2012, correct?

Me: Yes!

Interviewer: Can you tell me the features that are in 2000 but not in 2012?

Me: That’s a strange one. You mean the deprecated features that are in 2000 but not in 2012?

Interviewer: Exactly

Me: Well, I believe 90% of things changed from 2000 to 2012, but anyway, here is my answer: Enterprise Manager, Query Analyzer, DTS packages, system catalogs, BACKUP LOG WITH TRUNCATE_ONLY, etc.

Interviewer: Can you tell me the top 5 T-SQL features you like most that are in 2012 but not in 2000?

Me: Sequences, TRY / CATCH / THROW, OFFSET / FETCH, RANK / DENSE_RANK / NTILE, CTEs, columnstore indexes, etc.

Interviewer: Have you ever worked on a NoSQL database?

Me: No, I didn’t get a chance to work on NoSQL.

Interviewer: Any idea about big data?

Me: I explained big data and how it is used in analytics. (I had expected this question and prepared for it.) I talked about document DBs, graph DBs, key-value stores, MapReduce, etc.

Interviewer: OK, will you work on a NoSQL database if you are given the chance?

Me: Sure, I will.

Interviewer: What support does Microsoft provide for big data in the latest SQL Server?

Me: I explained the ODBC drivers and the plugins for Hive and Hadoop. (I had prepared for this as well.)

Interviewer: What is the maximum database size you have worked with?

Me: I answered

Interviewer: What are the top parameters you would suggest when designing a VLDB?

Me: Parallelism, partitioning, dimensional modeling / denormalization, efficient deletes, and manual statistics updates.

Interviewer: Fair enough, any questions?

Me: Yes, I have one. We can see SQL Server 2016 is already in the pipeline; may I know the major features in SQL Server 2016?

Interviewer: (Explained!) Any other questions?

Me: Nothing more, but thanks.

Interviewer: Thanks for your patience. Please wait in the lobby.

I sat down for 10 minutes and had a coffee; I was very tired. I waited another 30 minutes, and then one of the staff members told me that I could expect a call the next week. I drove home and had a long bath. I knew I hadn’t answered every question perfectly, but I had just gone through 9 hours of interviews, the longest interview I have ever had.

On Monday I was very curious to know the result and waited for the call. I was disappointed, as I still hadn’t received a call by Tuesday. Finally, on Wednesday, I got a call saying that a discussion had been scheduled for Friday.

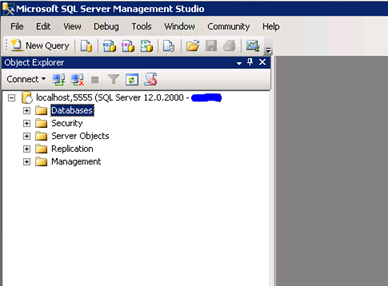

Day – 2

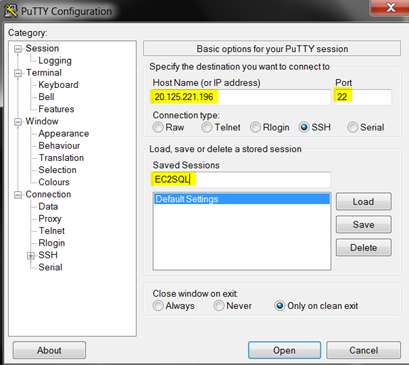

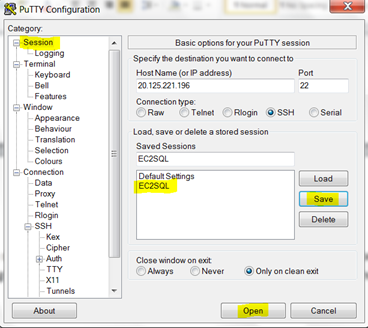

Technical Interview – 4


I reached the MS campus and took a local shuttle to the R&D building. I was very curious to know what kind of discussion it would be. HR told me there might be another round of technical discussion followed by a behavioral discussion.

I got a visitor badge and waited in the visitor lobby. The interviewer came and took me to his cabin.

Interviewer: Hi buddy, how are you today?

Me: Ha, I am very fine, thanks. How are you?

Interviewer: Pretty good, man. Can you introduce yourself?

Me: Explained (the same thing for the 4th time).

Interviewer: OK, what is your experience in SQL?

Me: I gave a detailed view of my SQL development experience.

Interviewer: Can you take this marker? I’ll give you a few scenarios; you just need to write a query. You can use whichever SQL dialect you feel most comfortable with.

Me: Sure (I took the marker and went to the board)

Interviewer: We have an employee table with columns (EmpID, Name, Mgr_ID, Designation). I’ll give you the input manager name “Thomos”. Write a query that lists all employees, with their managers, who report to “Thomos” directly or indirectly.

Me: I asked some questions, like what the Mgr_ID is for top-level employees (e.g., the CEO) and how NULL values should be displayed. Then I wrote a query using a recursive CTE.
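The recursive CTE answer can be sketched end to end with SQLite from Python, assuming a hypothetical employee table whose `mgr_id` is NULL for the CEO and which contains no reporting cycles. SQLite spells it `WITH RECURSIVE`; T-SQL uses plain `WITH` and stops at 100 levels of recursion unless `OPTION (MAXRECURSION n)` is given.

```python
import sqlite3

# Hypothetical sample rows: (emp_id, name, mgr_id, designation); mgr_id is NULL for the CEO
SAMPLE = [
    (1, "Ava", None, "CEO"),
    (2, "Thomos", 1, "Director"),
    (3, "Ben", 2, "Manager"),
    (4, "Cara", 2, "Engineer"),
    (5, "Dev", 3, "Engineer"),
    (6, "Eli", 1, "Director"),
]

QUERY = """
WITH RECURSIVE reports(emp_id, name, mgr_id, lvl) AS (
    -- anchor member: direct reports of the named manager
    SELECT e.emp_id, e.name, e.mgr_id, 1
    FROM employee AS e
    JOIN employee AS m ON m.emp_id = e.mgr_id
    WHERE m.name = ?
    UNION ALL
    -- recursive member: anyone whose manager is already in the result
    SELECT e.emp_id, e.name, e.mgr_id, r.lvl + 1
    FROM employee AS e
    JOIN reports AS r ON e.mgr_id = r.emp_id
)
SELECT r.name, m.name AS manager_name, r.lvl
FROM reports AS r
JOIN employee AS m ON m.emp_id = r.mgr_id
ORDER BY r.lvl, r.name
"""


def build_employee_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, name TEXT, "
                 "mgr_id INTEGER, designation TEXT)")
    conn.executemany("INSERT INTO employee VALUES (?, ?, ?, ?)", SAMPLE)
    return conn


def reports_of(conn, manager_name):
    """All direct and indirect reports of manager_name, each with their immediate manager."""
    return conn.execute(QUERY, (manager_name,)).fetchall()


print(reports_of(build_employee_db(), "Thomos"))
```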

Interviewer: Write the SELECT query syntax and include all the clauses you know.

Me: I wrote the syntax and included all the clauses I could think of (DISTINCT, TOP, WHERE, GROUP BY, ORDER BY, query hints, etc.).

Interviewer: I have a table called T in which each record should appear exactly twice. Write a query that removes the rows that appear more than twice.

Me: I wrote a query using ROW_NUMBER in a CTE.
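A sketch of the ROW_NUMBER approach, assuming a hypothetical table `t(a, b)` where a duplicate means the same `(a, b)` pair, run on SQLite 3.25+ from Python: number the copies of each pair and delete everything numbered above 2. In T-SQL this is usually a CTE with `ROW_NUMBER()` followed by `DELETE FROM cte WHERE rn > 2`; SQLite cannot delete through a CTE, so the sketch targets the extra copies by `rowid` instead.

```python
import sqlite3


def keep_at_most_two(conn):
    """Delete every copy of a row in t(a, b) beyond the second one."""
    # Number the copies of each (a, b) pair; ordering by rowid keeps the two oldest copies.
    conn.execute("""
        DELETE FROM t
        WHERE rowid IN (
            SELECT rid FROM (
                SELECT rowid AS rid,
                       ROW_NUMBER() OVER (PARTITION BY a, b ORDER BY rowid) AS rn
                FROM t
            )
            WHERE rn > 2
        )""")
    conn.commit()


def make_table(rows):
    """Build the hypothetical table t(a, b) with the given rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (a INTEGER, b TEXT)")
    conn.executemany("INSERT INTO t VALUES (?, ?)", rows)
    return conn


conn = make_table([(1, "x")] * 3 + [(2, "y")] * 2)
keep_at_most_two(conn)
print(conn.execute("SELECT a, b, COUNT(*) FROM t GROUP BY a, b").fetchall())
```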

Interviewer: How do you do this in SQL Server 2000?

Me: I gave a query that can run in 2000.

Interviewer: Have you ever used covering index?

Me: Yes, in a lot of situations.

Interviewer: OK, why use a covering index, and when do we need one?

Me: Explained

Interviewer: OK, then what is the difference between a covering index, an indexed view, and a composite index?

Me: I explained in detail how and when to use each of them, with examples.

Interviewer: What is the top advantage of using a covering index?

Me: The performance gain for the queries it covers, mostly in analytics databases.

Interviewer: What is the disadvantage of using a covering index?

Me: Two reasons: one is slower INSERT/UPDATE/DELETE operations, and the other is extra space.

Interviewer: OK, what is the main impact of adding more columns to the covering index’s include list?

Me: It increases the index depth, and then we need to traverse the entire tree, etc.

Interviewer: That’s fair enough. What exactly is index depth?

Me: Explained!
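A rough way to see why a wider include list matters: included columns live only at the leaf level, so they reduce the number of rows per leaf page, and more leaf pages can add a level to the B-tree. The Python sketch below estimates depth under loudly simplified assumptions: 8,096 usable bytes per page, no row overhead, no fill factor, fixed-width rows, and a 6-byte child-page pointer in every non-leaf row. The function and its parameters are hypothetical; this is not a SQL Server formula.

```python
import math

PAGE_BYTES = 8096  # assumption: usable bytes per 8 KB page, ignoring row overhead and fill factor


def index_depth(row_count: int, key_bytes: int, include_bytes: int = 0,
                pointer_bytes: int = 6) -> int:
    """Estimate the number of B-tree levels, counting the leaf level as 1."""
    # Included columns are stored only at the leaf level, so they widen leaf rows only.
    leaf_rows_per_page = PAGE_BYTES // (key_bytes + include_bytes)
    # Non-leaf rows hold the key plus a pointer to a child page.
    nonleaf_rows_per_page = PAGE_BYTES // (key_bytes + pointer_bytes)
    pages = math.ceil(row_count / leaf_rows_per_page)
    depth = 1
    while pages > 1:  # add levels until a single root page covers everything
        pages = math.ceil(pages / nonleaf_rows_per_page)
        depth += 1
    return depth


print(index_depth(10_000_000, 8))                     # narrow leaf rows
print(index_depth(10_000_000, 8, include_bytes=400))  # wide include list
```

Under these assumptions, a 10-million-row index with an 8-byte key comes out at depth 3, and 400 bytes of included columns push it to 4. The real value for an existing index is reported in the `index_depth` column of `sys.dm_db_index_physical_stats`.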

Interviewer: We have 30 distributed systems located across the globe, all maintaining sales information. Each system follows its own schema. I am assigning you to build a centralized data warehouse. What is your approach? How do you design the process?

Me: I took 15 minutes and explained it with a diagram. I created a three-tier architecture using “Source”, “Staging”, and “Destination”, explained the data flow from source to destination through staging, and discussed technical mapping, data flow, package design, integrity, performance, business rules, data cleansing, data validations, final loading, and reporting.

Interviewer: That’s fair enough.

Interviewer: You are asked to look at a slow-performing stored procedure. How do you handle that?

Me: I explained in detail: execution plans, statistics, etc.

Interviewer: Which is better, a table scan or an index seek?

Me: I explained that it depends on the data/page count. Sometimes a table scan is better than an index seek.

Interviewer: Can you give me a scenario where a table scan is better than an index seek?

Me: I gave an example.

Interviewer: One of the SSRS reports is taking a long time. What is your approach, and what options do you look at?

Me: I explained step by step how to tune an SSRS report, discussing local queries, data sources, snapshots, cached reports, etc.

Interviewer: Data feeds are loaded into a data warehouse daily. Once the data load finishes, a report has to be generated and delivered by email to a specific list of recipients. What is your approach?

Me: We can use data-driven subscriptions, storing all the required information in a SQL Server database, which also allows dynamic report configuration.

Interviewer: What is the most complex report you have designed?

Me: Explained

Interviewer: I have two databases: one holds transactional tables and the other holds master tables. We need master table data with transactional aggregations in a single report. How do you handle this?

Me: We can use Lookup. (He asked for more detail, and I explained it in detail.)

Interviewer: Do you have any idea about the inheritance concept? Can you give me an example?

Me: I took an entity and gave an example of inheritance.

Interviewer: Can you write some code to showcase an inheritance example? You can use .NET or Java.

Me: I wrote an example.
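The interview allowed .NET or Java; to keep one language across the examples here, this is a minimal Python sketch of the same idea with hypothetical `Employee` and `Manager` classes. The subclass reuses the parent’s constructor and its `describe()` method, and overrides `annual_pay()`, so the inherited method picks up the subclass behavior.

```python
class Employee:
    """Base entity (hypothetical)."""

    def __init__(self, name: str, base_salary: float):
        self.name = name
        self.base_salary = base_salary

    def annual_pay(self) -> float:
        return self.base_salary

    def describe(self) -> str:
        # Calls annual_pay() on the actual object, so subclasses change the result
        return f"{self.name} earns {self.annual_pay():.2f}"


class Manager(Employee):
    """A Manager is an Employee with a bonus."""

    def __init__(self, name: str, base_salary: float, bonus: float):
        super().__init__(name, base_salary)  # reuse the parent's initialization
        self.bonus = bonus

    def annual_pay(self) -> float:
        # Override: extend the parent's calculation instead of repeating it
        return super().annual_pay() + self.bonus


print(Manager("Bob", 70000, 5000).describe())
```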

Interviewer: Can you explain what a semantic model is?

Me: Explained

Interviewer: What is SQL injection?

Me: Explained with examples
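The classic injection pattern is easy to reproduce. The Python sketch below uses an in-memory SQLite database as a stand-in for SQL Server, with a hypothetical `users` table: concatenating input into the SQL text lets `' OR '1'='1` return every row, while a parameterized query treats the same input as a plain value. In T-SQL, the safe counterpart is parameterized `sp_executesql` or a stored procedure that does not build dynamic SQL from input.

```python
import sqlite3


def setup_users():
    """Hypothetical users table with two rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "a1"), ("bob", "b2")])
    return conn


def find_user_unsafe(conn, name):
    # VULNERABLE: the input becomes part of the SQL text
    sql = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(sql).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the driver passes name as a value, never as SQL
    return conn.execute("SELECT name, secret FROM users WHERE name = ?", (name,)).fetchall()


conn = setup_users()
attack = "' OR '1'='1"
print(find_user_unsafe(conn, attack))  # leaks every row
print(find_user_safe(conn, attack))    # no user has that literal name
```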

Interviewer: Your system has been compromised, and it is confirmed that there was a SQL injection attack. What is your immediate action plan?

Me: I explained it step by step: remove access to the database, take the application down, analyze the data, find the affected locations, reverse-engineer the attack, fix the problem / restore / roll back, bring the database back online, and let application users back in.

Interviewer: That’s fine. What are the possible reasons for tempdb filling up?

Me: Explained! Internal objects, the version store, and user-defined objects.

Interviewer: You have written a stored procedure as the business required. What validations/tests do you do before releasing it to production?

Me: I explained code reviews and performance, security, integrity, functional, unit, load, and stress testing, etc.

Interviewer: That’s fair enough. Do you have any questions?

Me: I am clear on the job roles and responsibilities, but may I know which area we are going to work on? I know that any product organization usually has various product engineering groups, so I am curious which one we will be in.

Interviewer: Well… (He explained the details.)

Interviewer: It was nice talking to you. Please wait outside; you may need to go through one more discussion.

I went to the visitor lobby. After 10 minutes a staff member told me that I needed to meet a manager, so I caught a shuttle to the next building. I reached the venue and waited for the manager. He came and took me to the cafeteria. We had a general discussion and he offered me lunch; we both had lunch, which took 20 minutes. He seemed very cool, and I actually forgot that I was attending an interview. After another 10 minutes we reached his cabin.

I had actually done a lot of homework to recall all my success and failure stories and experiences, and I had prepared well, as I had an idea of what a behavioral discussion involves. I am sure nobody can face these questions without proper preparation.

Final Interview – 5


Interviewer: Hi XXXX, I am XXXX, development manager. Can you tell me about your background?

Me: Explained!

Interviewer: The report server is not responding because of a low memory issue. Any idea about memory configuration for an SSRS server?

Me: I explained MemorySafetyMargin, MemoryThreshold, WorkingSetMaximum, and WorkingSetMinimum.

Interviewer: What is application pooling?

Me: Explained! Advantages, thresholds, and timeout issues.

Interviewer: Any idea about design patterns?

Me: Explained! Creational, structural, and behavioral patterns, and .NET.

Interviewer: From your personal or professional life, what are your best moments?

Me: Answered!

Interviewer: Can you tell me about a situation where you troubleshot a performance problem?

Me: Explained!

Interviewer: Let’s say you are working as a business report developer. You get an email from your manager saying there is an urgent requirement. Then you get a call from the AVP (your big boss), who gives you a different requirement and says it should be the priority. How do you handle the situation?

Me: Answered! Justified

Interviewer: You are working for a premium customer and have planned the final production deployment. You are the main resource, and 70% of the work depends on you. Suddenly you get a call: there is an emergency at home. What is your priority, and how do you handle the situation?

Me: Answered! Justified.

Interviewer: How do you give time estimates?

Me: Explained! I took an example and explained how I give time estimates.

Interviewer: What is the biggest mistake in your personal or professional life?

Me: (It’s really tough.) I gave a scenario where I failed.

Interviewer: Did you make any process improvement that saved your organization money?

Me: Yes, I did! I explained the lean project I initiated and completed successfully.

Interviewer: For a programmer/developer, what is more important: expertise in technology or in the domain/business?

Me: Answered! Justified

Interviewer: Can you tell me about an innovative solution you initiated?

Me: Answered!

Interviewer: Have you ever been stuck in a situation where you missed a deadline?

Me: Yes, it has happened! I explained how I managed the situation.

Interviewer: OK, your manager asks you to design, develop, and release a module in 10 days, but you are sure it can’t be done in 10 days. How do you convince him?

Me: Answered how I would convince him.

Interviewer: From your experience, what are the top 5 factors in being a successful person?

Me: Answered!

Interviewer: If you got the chance to rewind to any particular moment in your life, what would it be?

Me: Answered!

Interviewer: If I gave you the chance to choose one of these positions, Technology Lead, Lead Business Analyst, or Customer Engagement Lead, which would you choose? Remember, we don’t care about your previous experience; we will train you for the role you choose.

Me: Answered! And justified

Interviewer: Why do you want to join Microsoft?

Me: Answered! Justified

Interviewer: Since you said you have been using Windows, SQL Server, and other Microsoft products, what is the best thing Microsoft makes?

Me: The operating system. It is the leader and drives the software world. As per the statistics, 95% of people start learning computers on Windows.

Interviewer: Just imagine you were given the chance to develop a new product for Microsoft. What would you develop? Any plans?

Me: I would build a new R&D team and design a mobile device that works based on artificial intelligence.

Interviewer: That’s awesome.

Interviewer: It was nice talking to you. Please wait outside; we will call you in 10 minutes.

After a 10-minute wait, a staff member told me that I could expect a call in 2 or 3 days.

Three days later, while I was at the office, I missed a call from the recruiter. I got a message that she had an update for me. When I called her back, she gave me the good news that they were going to make me an offer. Within the next half hour I received an email with the salary breakup and the formal offer letter. I didn’t expect that, as we hadn’t had any discussion about salary. It was a super deal that no one could say “NO” to. I came out of the room practically dancing: finally, 4 weeks of hard work and endless hours of practice had all paid off. I called my parents and close friends; that is one of the best moments of my life. I hope this article about an interview experience with Microsoft helps you (SQL, MSBI, and SQL Server developers) prepare for a Microsoft interview.


The post An Interview Experience with Microsoft appeared first on udayarumilli.com.